What is a Convolutional Neural Network?

A Convolutional Neural Network, or CNN, is a special type of artificial neural network (a deep learning algorithm) used to recognize images and visual information. It is one of the key techniques in image recognition and object detection.

It is used for applications such as:

- Recognizing faces in photos

- Distinguishing between cats and dogs

- Reading handwritten digits

- Analyzing medical images like X-rays

- Identifying traffic signs for self-driving cars

Deep learning is a branch of machine learning that uses neural networks with many layers, which learn useful representations directly from data.

A Convolutional Neural Network is designed to learn what to look for in an image, loosely inspired by how the human visual system works. Instead of connecting every pixel to every neuron (as a standard fully connected network does), a CNN scans small sections of the image for patterns and reuses the same small filters across the whole image. This makes it much more efficient and usually more accurate on images.

Why Use CNNs?

Traditional neural networks (such as fully connected networks) do not scale well to large images: they treat every pixel as a separate input that is connected to every neuron in the next layer, which results in massive networks with far too many parameters.
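To get a feel for the scale, here is a rough parameter count in Python. The 224x224 RGB input, the 1,000-neuron fully connected layer, and the 64 convolutional filters of size 3x3 are assumed example sizes chosen for illustration, not values taken from a specific network.

```python
# Assumed example sizes, only to illustrate the difference in scale.
height, width, channels = 224, 224, 3   # a typical RGB input size
hidden_neurons = 1000                   # size of one fully connected layer


def dense_params(n_inputs: int, n_outputs: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_outputs + n_outputs


def conv_params(kernel: int, in_channels: int, n_filters: int) -> int:
    """Weights plus biases of a conv layer; note: independent of image size."""
    return kernel * kernel * in_channels * n_filters + n_filters


# Every pixel value is a separate input to every hidden neuron.
fc = dense_params(height * width * channels, hidden_neurons)
# 64 filters of size 3x3x3, each reused at every position in the image.
conv = conv_params(3, channels, 64)

print(f"Fully connected: {fc:,} parameters")
print(f"Convolutional:   {conv:,} parameters")
```

Under these assumptions the fully connected layer needs 150,529,000 parameters, while the convolutional layer needs only 1,792, because the same small filters are reused at every position instead of learning a separate weight per pixel.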

When you feed an image to a computer, it only sees a collection of numbers (pixels). A regular computer does not understand what a cat or a traffic sign is. A CNN learns to recognize what is important, such as:

- Shapes of ears or eyes

- Contrast between light and dark areas

- Patterns like lines, edges, or colors

A significant advantage is that a CNN learns on its own which features are essential, without a human programming them explicitly. By examining thousands of labeled example photos of cats, dogs, and other animals, it gradually learns the differences between them.

How Does a CNN Work?

You can compare it to how we look at something:

Step 1: Looking at fragments of the image

The CNN does not look at the entire image at once, but at small segments (e.g., 3x3 or 5x5 pixel squares). This allows it to learn, for example, 'Ah, there is an edge here' or 'this looks like an eye.'
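The sliding-window idea from Step 1 can be sketched in plain Python. This is a minimal illustration, assuming a tiny 5x6 grayscale image (dark on the left, bright on the right) and a hand-picked vertical-edge filter; in a real CNN the filter values are learned during training, and libraries use much faster implementations. Like most deep learning libraries, it computes cross-correlation (the filter is not flipped), which is what CNN layers call "convolution".

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the small patch element-wise with the kernel and sum it up.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 5x6 grayscale image: dark left half (0), bright right half (9).
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

# Hand-made vertical edge filter (a trained CNN would learn its own values).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

edges = convolve2d(image, kernel)
for row in edges:
    print(row)
```

Every row of the resulting feature map is [0, 27, 27, 0]: the filter responds strongly only where the image changes from dark to bright, which is the "there is an edge here" signal, and stays at zero over flat regions.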

Step 2: Skipping unimportant information

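After recognizing patterns in small fragments, the CNN summarizes them and keeps only the strongest signals in each region (a step called pooling). This reduces the amount of data, so the network works faster and uses less memory, and it makes the result less sensitive to the exact position of a feature.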
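Step 2 usually corresponds to max pooling. A minimal sketch of 2x2 max pooling with stride 2, assuming a small made-up 4x4 feature map whose top-left block holds the values [4, 7, 1, 5] used in the pooling example under the architecture section:

```python
def max_pool(feature_map, size=2):
    """Max pooling with a size x size window and stride equal to size."""
    pooled = []
    # Step through the map in non-overlapping blocks; leftover rows/columns are dropped.
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            block = [feature_map[i + di][j + dj]
                     for di in range(size) for dj in range(size)]
            row.append(max(block))  # keep only the strongest response
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map.
fm = [
    [4, 7, 2, 0],
    [1, 5, 3, 8],
    [0, 2, 6, 1],
    [3, 1, 4, 2],
]

pooled = max_pool(fm)
print(pooled)
```

The 4x4 map shrinks to 2x2 ([[7, 8], [3, 6]]): a quarter of the values remain, but the strongest response in each region survives.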

Step 3: Making a decision

Ultimately, the network combines all the information and states, for example, 'I think this is a cat with 95% certainty.'
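A statement like "95% certainty" is typically produced by a softmax function, which turns the network's raw output scores into probabilities that sum to 1. A minimal sketch; the three labels and their scores are made-up values for illustration.

```python
import math


def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog", "rabbit"]
scores = [5.0, 1.5, 1.0]  # hypothetical outputs of the final layer

probs = softmax(scores)
for label, p in zip(labels, probs):
    print(f"{label}: {p:.1%}")
```

With these scores, roughly 95% of the probability goes to "cat", so the network would report "cat" as its answer.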

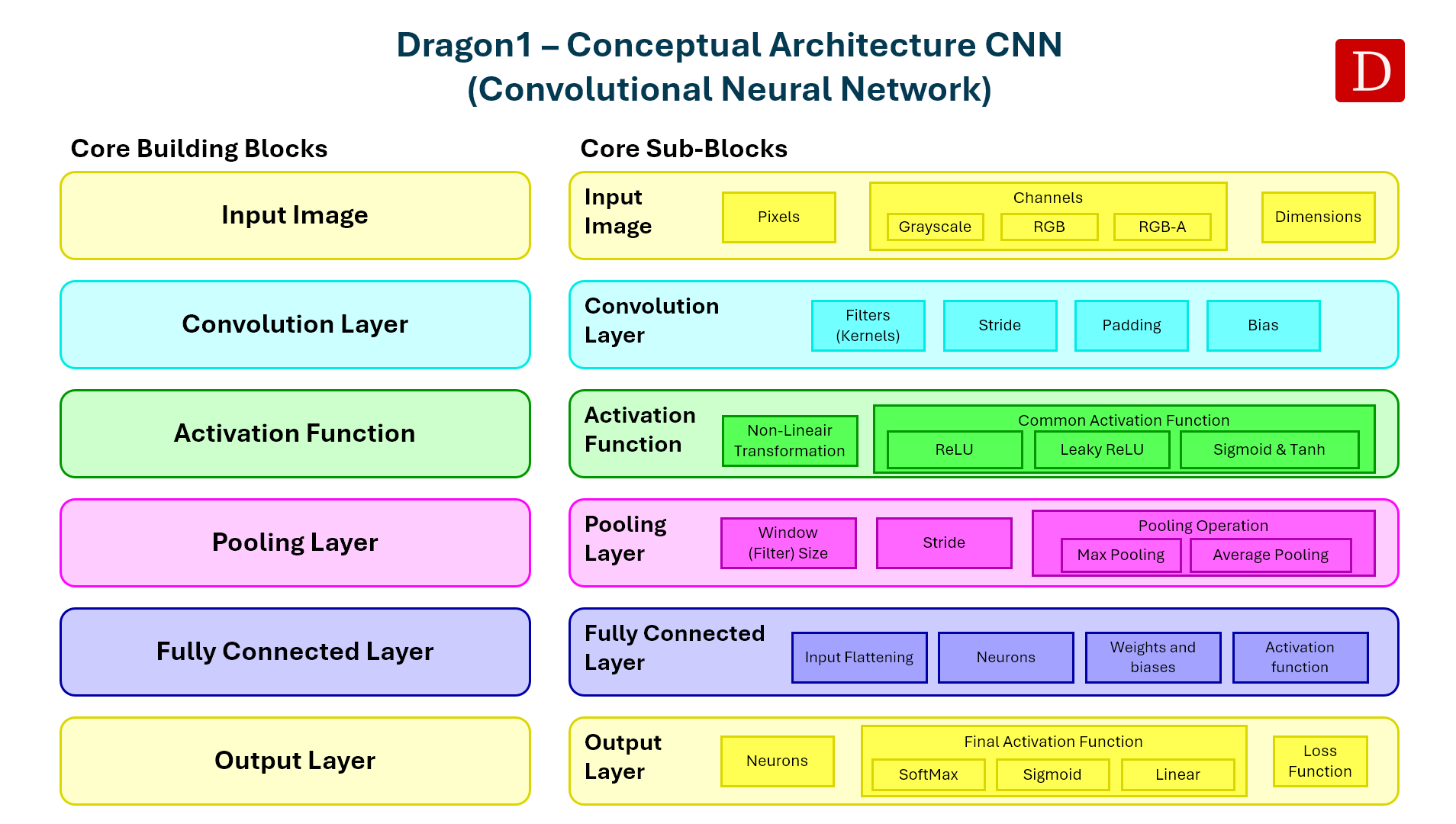

Architecture of a Convolutional Neural Network

A CNN consists of multiple layers that work together to build up an increasingly detailed understanding of an image. The combination of convolution, ReLU, and pooling is often repeated multiple times.

This allows the network first to learn simple features (such as lines) and then progressively more complex shapes (such as eyes or objects). Common layers include:

- Convolutional Layer: A small filter slides over the image and recognizes patterns such as edges or corners. This produces a feature map: a map of where specific patterns were found.

- ReLU (Rectified Linear Unit): An activation function that passes positive values unchanged and sets negative values to zero: f(x) = max(0, x). It adds non-linearity, enabling the network to learn complex patterns.

- Pooling Layer: Simplifies the data by reducing its size, e.g., using max pooling (keeping the largest value in each region). For example, from the 2x2 region [4, 7, 1, 5], only the 7 is kept. This reduces the data size, speeds up computation, and can help prevent overfitting.

- Repeated Conv + ReLU + Pooling: Often used multiple times to capture increasingly abstract features (from edges to full objects).

- Flattening Layer: Flattens the 2D feature maps into a 1D vector to be fed into the final layers.

- Fully Connected Layer: Combines all learned information to make a prediction.

- Output Layer: Produces the final result, often using a softmax function to calculate probabilities.
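The layer stack above can be traced by following how the shape of the data changes. This sketch assumes a 28x28 grayscale input, two convolutional layers with 32 and 64 filters of size 3x3 (no padding, stride 1), and 2x2 max pooling with stride 2; these sizes are illustrative, not a prescribed architecture. It uses the standard output-size formula (size - kernel + 2 * padding) // stride + 1.

```python
def output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (size - kernel + 2 * padding) // stride + 1


def relu(values):
    """ReLU applied element-wise: negatives become 0, positives pass unchanged."""
    return [max(0, v) for v in values]


# Hypothetical stack; ReLU layers are omitted because they do not change the shape.
layers = [
    # (description, kernel size, stride, number of filters or None for pooling)
    ("conv 3x3, 32 filters", 3, 1, 32),
    ("max pool 2x2", 2, 2, None),
    ("conv 3x3, 64 filters", 3, 1, 64),
    ("max pool 2x2", 2, 2, None),
]

size, channels = 28, 1  # e.g. a 28x28 grayscale digit
for name, kernel, stride, filters in layers:
    size = output_size(size, kernel, stride)
    if filters is not None:  # conv layers set the channel count; pooling keeps it
        channels = filters
    print(f"{name:<22} -> {size}x{size}x{channels}")

flat = size * size * channels
print(f"flatten -> vector of {flat} values")
print(relu([-3, 0, 2, -1, 5]))
```

Under these assumptions the data goes from 28x28x1 to 26x26x32, 13x13x32, 11x11x64, and 5x5x64, and the flattening layer turns that into a vector of 1,600 values for the fully connected layer. The image gets spatially smaller while the number of feature maps grows, which matches the move from simple features to more abstract ones.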

Practical Examples

Example 1: Recognizing Objects with Google Lens

- You point your phone camera at an object, for example, a flower, a shoe, a pet, or a building.

- Google Lens analyzes the image in real time using a Convolutional Neural Network (CNN) trained on millions of labeled images.

- The CNN identifies patterns in the image (such as color, shape, and texture, e.g., fur patterns) and compares them to known categories.

- It outputs a prediction, such as:

  - 'This is a hibiscus flower.'

  - 'These are Nike Air Max sneakers.'

  - 'This is a Labrador Retriever.'

  - 'This is the Pantheon in Rome.'

This is useful for visually searching instead of typing, identifying unknown objects, translating text in images, or scanning products to shop online.

Example 2: Traffic Sign Recognition in Self-Driving Cars

- A self-driving car is equipped with one or more cameras that continuously capture images of the road and environment.

- Each frame (image) is passed through a CNN that has been trained on a large dataset of traffic signs from different countries and weather conditions.

- The CNN analyzes small patches of the image to identify key patterns, such as:

  - The circular shape of a sign

  - Colors like red, white, and blue

  - Symbols or numbers, such as a speed limit or a no-entry icon

- Once a sign is detected, the CNN classifies it into one of the known categories, such as:

  - 'Stop Sign'

  - 'Speed Limit 50'

  - 'No Entry'

  - 'Pedestrian Crossing'

- The car’s navigation system uses this information to make decisions:

  - Slow down or stop

  - Change direction

  - Warn the driver or take action automatically

This process happens in real time, allowing the car to respond quickly to road signs and improve traffic safety.

Example 3: Reading Handwritten Digits or Text

- A handwritten digit or word is written on paper, a touchscreen, or captured in a photo.

- The image is converted to grayscale and resized to a standard size (for example, 28x28 pixels for digits).

- The image is passed through a Convolutional Neural Network (CNN) that has been trained on datasets such as MNIST (for digits) or EMNIST (for letters and digits).

- The CNN performs the following:

  - Detects basic patterns like curves, vertical lines, and closed loops

  - Combines these features into more complex shapes (like the top of a '3' or the tail of a '9')

- The final layers of the CNN determine which digit or character the image most likely represents, for example:

  - 'This is a 7, with 98% confidence.'

  - 'This is the letter B, with 95% confidence.'

- The result is passed on to the application, such as:

  - Filling in a form automatically

  - Sorting mail by postcode

  - Solving handwritten math problems in an educational app

This approach is widely used in Optical Character Recognition (OCR) systems and helps digitize written content accurately and efficiently. Modern systems can even handle messy handwriting by training CNNs on augmented data and a wide variety of writing styles.
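The preprocessing steps from this example (grayscale conversion and resizing to 28x28) can be sketched in plain Python. This assumes the photo is already loaded as rows of (R, G, B) tuples with values 0-255 (loading an image file would need an imaging library, which is left out here). It uses the common ITU-R BT.601 luma weights for grayscale and nearest-neighbour resizing as one simple option; real systems usually rely on an image library with smoother resampling. The 56x56 "photo" is hypothetical test data.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to gray values using BT.601 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def resize_nearest(image, new_h, new_w):
    """Nearest-neighbour resize: each target pixel copies the closest source pixel."""
    old_h, old_w = len(image), len(image[0])
    return [[image[i * old_h // new_h][j * old_w // new_w] for j in range(new_w)]
            for i in range(new_h)]


def normalize(image):
    """Scale 0-255 values to the 0.0-1.0 range expected by many models."""
    return [[v / 255.0 for v in row] for row in image]


# Hypothetical 56x56 photo: white background with a dark vertical stroke (a "1").
photo = [[(0, 0, 0) if 24 <= x < 32 else (255, 255, 255) for x in range(56)]
         for _ in range(56)]

digit = normalize(resize_nearest(to_grayscale(photo), 28, 28))
print(len(digit), len(digit[0]))
```

MNIST stores digits as light strokes on a dark background, so a pipeline feeding an MNIST-trained model would typically also invert the values (1.0 - v) before classification.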

Example 4: Detecting Cancer in Medical Images

- A patient gets a medical scan, such as a chest X-ray or a mammogram.

- The image is analyzed by a CNN that has been trained on thousands (or millions) of labeled medical images.

- The CNN looks for specific visual patterns associated with diseases, for example:

  - Abnormal shapes or densities in tissues

  - Unusual textures or asymmetries

  - Microcalcifications or lesions

- The output could be:

  - 'Suspicious region in upper left quadrant (87% confidence)'

  - 'Possible signs of lung cancer'

  - 'No abnormalities detected'

Doctors use these CNN predictions to support their diagnosis, improve accuracy, and reduce the chance of missing early signs of cancer.

Key Terms Explained

| Term | Meaning |
| --- | --- |
| Convolution | Sliding a small filter over an image to detect patterns |
| Filter / Kernel | A small matrix of weights used to detect edges, textures, etc. |
| Pooling | Summarizes regions of a feature map, e.g., by keeping the largest value |
| Feature Map | A map showing where features were detected |
| Fully Connected Layer | Neurons that combine all learned features to predict the output |
| ReLU | An activation function that keeps positive values and sets negative values to zero |

Why Are CNNs Powerful?

- They have far fewer parameters than fully connected networks because they share the same filter weights across the whole image.

- They are excellent at detecting patterns, even when slightly shifted or distorted.

- They can automatically learn what is important, without us having to tell them.

A disadvantage is that convolutional neural networks require a lot of data and computing power to train effectively.

Interesting Things to Discover

Discover more pages to help you discover interesting things you can do with Dragon1 software.