CxO Briefing: Cloud Financial Ops (FinOps) & IT Operations

20% Reduction in Cloud Overspend via Predictive ML/AI Blueprint

Infrastructure Capacity Planning (IT Ops)

How to achieve proactive and automated cloud capacity optimization?

The Dragon1 AI BPMN Process Architect integrated Machine Learning models for predictive capacity planning, cutting cloud overspend by automatically right-sizing resources against future demand forecasts.

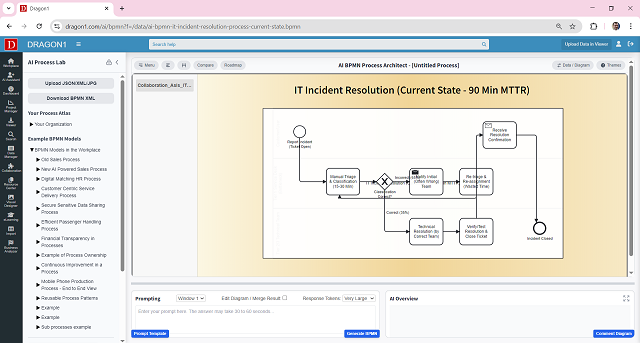

1. Current State (As-Is) - Manual/Reactive Capacity Management

20% Cloud Overspend | Quarterly Reviews

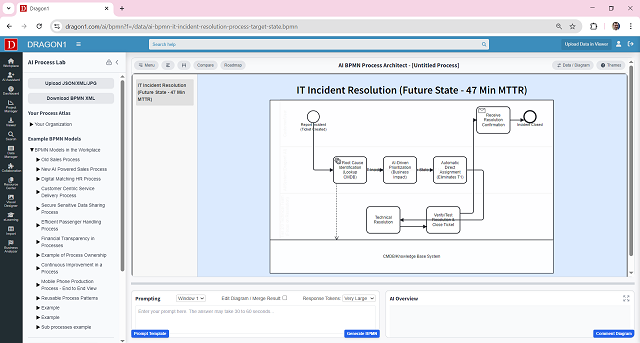

2. Future State (To-Be) - Predictive ML-Driven Capacity Planning

Optimized Spend | Real-time Resource Alignment

Immediate Payback Justification

30% Efficiency Gain: The Cost of Doing Nothing

20%

Reduction in unnecessary cloud infrastructure spend (Cloud Overspend).

95%

Accuracy in predicting future resource needs based on business forecasts.

Real-Time

Infrastructure alignment with demand, eliminating capacity bottlenecks and waste.

The Enterprise Result: Financial & Operational Metrics

20%

Lower Cloud Overspend & Optimized Budget.

ML models scale resources down during low-demand periods, directly lowering infrastructure spend.

Predictive Scaling

Proactive Resource Provisioning.

Infrastructure is scaled before peak demand hits, avoiding performance degradation and user impact.

Automated FinOps

Infrastructure Cost Transparency and Control.

The documented BPMN model embeds automated cost analysis and optimization checks into the core planning cycle.

Detailed Process Comparison: Before and After AI

1. Current State (As-Is): The Quarterly/Manual Capacity Lag

The initial process relied on human review of lagging indicators. The result was either expensive over-provisioning or reactive scaling during incidents, and a consistent 20% cloud overspend.

| Process Step | Description | Impact |
| --- | --- | --- |
| Manual Forecasting | Capacity forecasts were based on quarterly reviews and simple linear projections, missing seasonal and real-time business changes. | High risk of over-provisioning (Cloud Overspend) or under-provisioning (Performance Issues). |
| Reactive Scaling | Resource scaling typically happened only after performance thresholds were breached, impacting user experience. | Service degradation during peak loads; wasted spend during troughs. |
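To make "wasted spend during troughs" concrete, the sketch below measures overspend as the share of provisioned capacity that went unused. The demand figures and the function name `overspend_share` are illustrative assumptions, not data or tooling from this briefing.

```python
def overspend_share(demand, provisioned):
    """Fraction of provisioned capacity-periods that went unused."""
    if len(demand) != len(provisioned):
        raise ValueError("series must have the same length")
    total = sum(provisioned)
    if total == 0:
        return 0.0
    # Only count capacity above demand; shortfalls are not waste.
    wasted = sum(max(p - d, 0) for d, p in zip(demand, provisioned))
    return wasted / total


# Hypothetical demand in instance-equivalents over eight periods.
demand = [4, 4, 6, 10, 10, 6, 4, 4]

# As-Is style: capacity fixed at the observed peak for every period.
static = [max(demand)] * len(demand)

print(f"Unused share with peak-sized capacity: {overspend_share(demand, static):.0%}")
```

With these made-up numbers, sizing every period for the peak leaves 40% of capacity idle. Real figures depend on the workload's demand profile.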

2. Future State (To-Be): The Predictive ML-Optimized Blueprint

The Dragon1 AI BPMN Process Architect generated the Future State model, embedding Machine Learning for demand prediction, achieving a significant reduction in waste and a 20% cut in cloud overspend.

| Process Step | Description | Impact |
| --- | --- | --- |
| ML Demand Prediction | Machine learning models predict future needs based on granular historical data and real-time business metrics. | Resources are proactively provisioned at the optimal size and time, eliminating the 'guesswork' overspend. |
| Automated Right-Sizing | The system automatically adjusts resource types, sizes, and reservations based on the ML forecast and observed usage patterns. | Continuous cost optimization without human intervention; guaranteed cost-efficiency. |
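The forecast-then-right-size loop can be sketched in a few lines. The briefing does not specify which ML model is used, so a simple seasonal-average baseline stands in for it here. The function names, the 20% headroom, and the sample history are all assumptions for illustration.

```python
import math


def seasonal_naive_forecast(history, season_length, horizon):
    """Forecast each future period as the mean of past values
    observed at the same position in the season."""
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    forecast = []
    for step in range(horizon):
        phase = (len(history) + step) % season_length
        same_phase = history[phase::season_length]
        forecast.append(sum(same_phase) / len(same_phase))
    return forecast


def right_size(forecast, unit_capacity, headroom=0.2):
    """Instances needed per period: forecast plus headroom, rounded up,
    with a floor of one instance."""
    return [
        max(1, math.ceil(f * (1 + headroom) / unit_capacity))
        for f in forecast
    ]


# Hypothetical requests-per-second history covering two four-period seasons.
history = [100, 300, 500, 200, 120, 320, 520, 220]

forecast = seasonal_naive_forecast(history, season_length=4, horizon=4)
plan = right_size(forecast, unit_capacity=100)

print(forecast)  # [110.0, 310.0, 510.0, 210.0]
print(plan)      # [2, 4, 7, 3]
```

The plan provisions seven instances only for the forecast peak and scales down to two in the trough, instead of holding peak capacity throughout. A production system would replace the baseline with a trained model and apply the plan through the cloud provider's scaling controls.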